Decided to merge it all into one post. Reference indexes are not merged; they reset after each list.

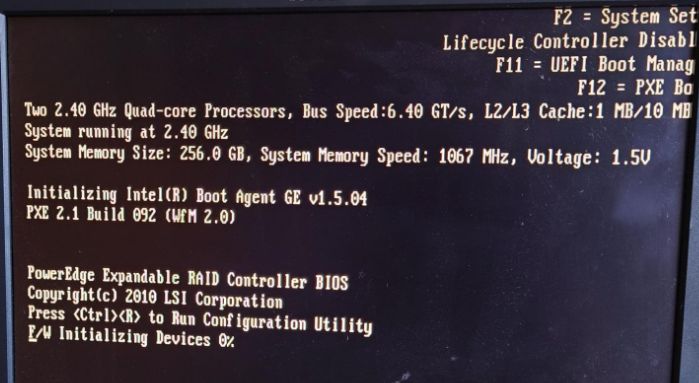

I acquired a used Dell PowerEdge T620 with 2.5-inch bays, 2x Xeon E5-2609, and 256GB memory.

There are some discrepancies between the disks supported according to the documentation and what people report actually works. Then again, the latest firmware was likely written after the documentation was released.

The RAID controller included is H700.

Considered having M.2 drives connected to a PCIe adapter, but bifurcation isn’t supported before T630, and RAID seems tricky there.

In the interest of playing it a bit safe, I want to go with what most likely works well, as I don’t have enough parts to test with.

I am reasonably hopeful that 4x 1TB Samsung SSD 870 EVO will be supported, “worst case” with the 800GB it’s said to actually support (as in -200GB usable each).

In the hope of avoiding issues later, the first major step is to make sure everything is fully up-to-date. That was a bit of a challenge. Dell’s site has a lot of updates, in .exe, .EXE (uhm?), and .bin formats. The first two usually uploaded, but failed to install. The latter wouldn’t even upload.

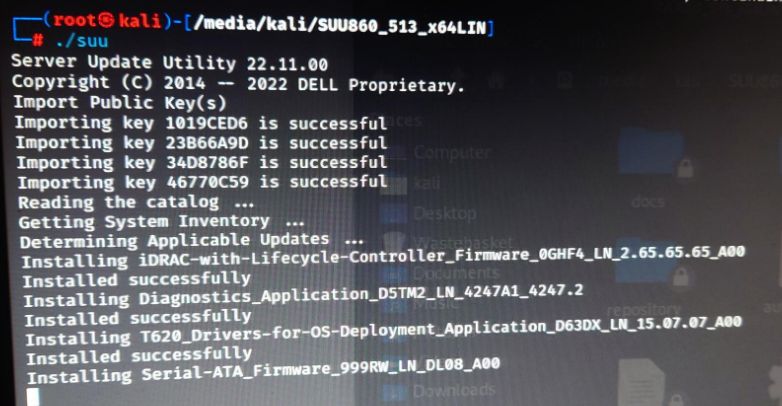

The solution in the end was to download a 13GB iso file[0][1], mount it and run ./suu from Linux running on the server.

The process took quite a while, but seemingly took care of everything on its own.

Sadly, it made the fans go from quiet to holy shit. For others as well[2].

Luckily, there’s a well explained Reddit post in r/homelab[3] that shows how the ipmitool command can be used to control the fans. For some reason the options within iDRAC/BIOS were really poor. The low/high threshold had the auditory difference of what I thought was the max speed, and me physically sitting on the case so it doesn’t slowly glide across the floor.

The commands I went with:

# In order to actually enable manual fan control

$ ipmitool -I lanplus -H <idrac_ip> -U <user> -P <pass> raw 0x30 0x30 0x01 0x00

# To alter the speed

$ ipmitool -I lanplus -H <idrac_ip> -U <user> -P <pass> raw 0x30 0x30 0x02 0xff 0x14

$ ipmitool -I lanplus -H <idrac_ip> -U <user> -P <pass> raw 0x30 0x30 0x02 0xff 0x0f

# The last part is the percent in hex.

# 0x14 = 20

# 0x0f = 15

# To read current values

$ ipmitool -I lanplus -H <idrac_ip> -U <user> -P <pass> sdr list full

This really should be so much easier to manage. Considering that my credentials allowed me to do it, I don’t really see a great reason it’s not present in the iDRAC user interface. But in any case, this is kinda fancy too. Might have to set up some automated command based on the current temps to adjust it dynamically.
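As a starting point for that automation, here is a minimal Python sketch that picks a fan percentage from a temperature and builds the matching `ipmitool` arguments. The temperature thresholds in `FAN_CURVE` and the function names are my own illustrative assumptions, not tested values. It only builds the command, it does not read sensors or run anything, and manual mode still has to be enabled first with the `0x01 0x00` command above. The `0x01 0x01` fallback for handing control back to iDRAC is widely reported but is an assumption here.

```python
import shlex

# Hypothetical temperature-to-fan-speed steps (upper limit in degrees C, percent).
# These thresholds are illustrative assumptions, not tested values.
FAN_CURVE = [(40, 15), (50, 20), (60, 35)]


def fan_percent(temp_c):
    """Return a fan percentage for a temperature, or None to hand control back."""
    for limit, percent in FAN_CURVE:
        if temp_c < limit:
            return percent
    return None  # hotter than the curve covers: let iDRAC manage the fans


def ipmi_raw(host, user, password, *raw_bytes):
    """Build the ipmitool argument list for a raw command (does not run it)."""
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-P", password,
            "raw", *(f"0x{b:02x}" for b in raw_bytes)]


def fan_command(host, user, password, temp_c):
    """Pick the raw command for the given temperature."""
    percent = fan_percent(temp_c)
    if percent is None:
        # Assumption: 0x01 0x01 re-enables automatic fan control.
        return ipmi_raw(host, user, password, 0x30, 0x30, 0x01, 0x01)
    # Same bytes as the manual commands above; the last byte is the percent.
    return ipmi_raw(host, user, password, 0x30, 0x30, 0x02, 0xFF, percent)


if __name__ == "__main__":
    print(shlex.join(fan_command("<idrac_ip>", "<user>", "<pass>", 45)))
```

The returned list can be passed to `subprocess.run` from a cron job or systemd timer once the thresholds have been checked against the temperatures reported by `sdr list full`.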

[0] https://www.dell.com/support/kbdoc/da-dk/000123359/dell-emc-server-update-utility-suu-guide-and-download?lang=en

[1] https://www.dell.com/support/home/en-us/drivers/driversdetails?driverid=hg3xt&oscode=ws8r2&productcode=poweredge-t620

[2] https://www.dell.com/community/PowerEdge-Hardware-General/T620-fan-became-noisy-after-updating-BIOS-and-firmware/td-p/5092038

[3] https://www.reddit.com/r/homelab/comments/7xqb11/dell_fan_noise_control_silence_your_poweredge/

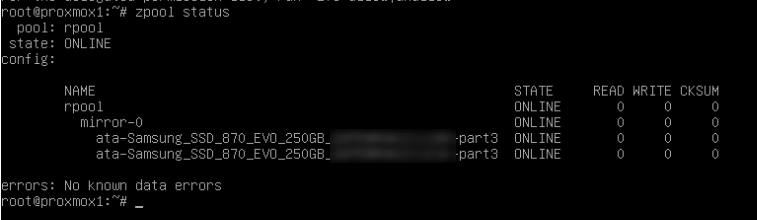

I will be using ZFS, with 2 parity drives for the 4x 1TB drives. Mirrored 250G for Proxmox itself.

Turns out, H700 does not support non-RAID drives. Seemingly not even with firmware flashing. While it is possible to create two single-SSD RAID0’s, the OpenZFS documentation[1] explicitly says not to.

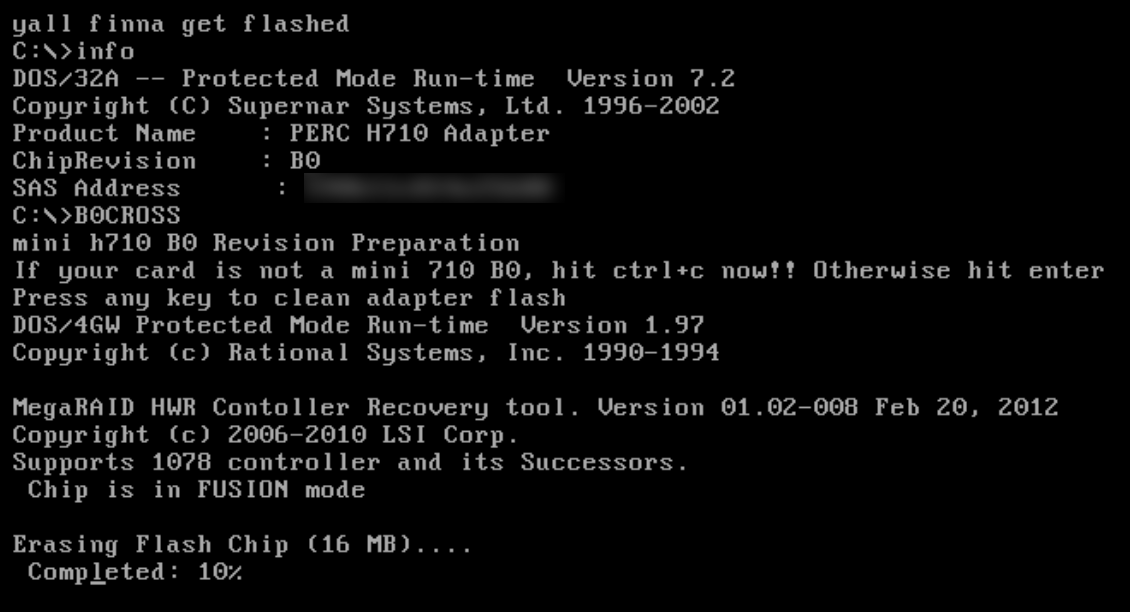

Some weeks after a trip to eBay, my H710 arrived. This by itself does not seem to solve all my challenges, but it is listed as a card that supports being flashed.

As such, I followed a guide[2], and tada, physical disks (IT mode) are now supported. A bit closer to the goal.

Just for the record, I yolo’d a bit, as it was NOT an exact match like the guide says it needs to be. But from what I can tell, it very much worked.

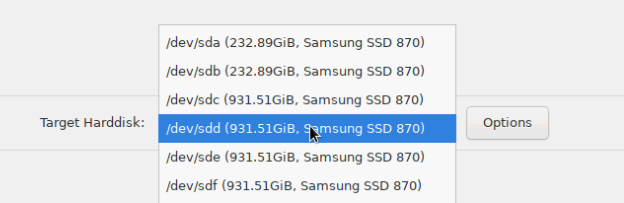

With the physical drives supported, the Proxmox VE installer made it very simple to set up a mirrored raid with ZFS.

Although, after the installation was completed, nothing booted. I suspected this was due to the ZFS file system not being recognized. Tried EXT4 instead. That didn’t work either.

Turns out, I missed a key part of the manual. Flashing the boot image is not optional when you plan to boot from the adapter. After running that single command and rebooting, I was able to boot Proxmox.

Though, this was now EXT4 Proxmox, so I installed it once again, with RAID1 ZFS.

And thus, Proxmox is successfully installed and working the way I hoped for.

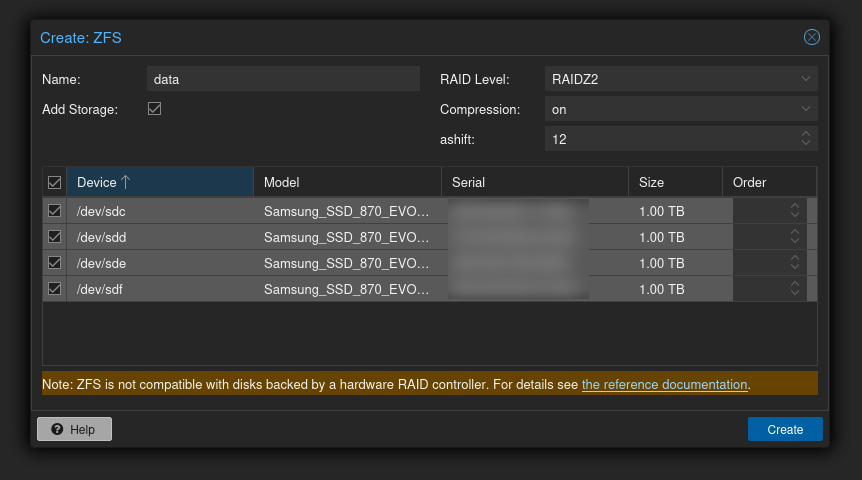

The remaining 4 drives are set up with RAIDZ2, allowing 2 disks to fail. Possibly overkill; time will show if I need more space soon, or if drives keep failing.
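To put a number on the trade-off, here is a rough back-of-the-envelope sketch in Python. It only counts data disks times disk size and ignores ZFS metadata, padding, and TB/TiB differences, so the real usable space will be somewhat lower. The function name is my own.

```python
def raidz_usable_tb(disks, disk_tb, parity):
    """Rough usable capacity: data disks times disk size, ignoring ZFS overhead."""
    if not 1 <= parity <= 3:
        raise ValueError("RAIDZ supports 1 to 3 parity disks")
    if disks <= parity:
        raise ValueError("need more disks than parity disks")
    return (disks - parity) * disk_tb


print(raidz_usable_tb(disks=4, disk_tb=1.0, parity=2))  # 4x 1TB in RAIDZ2
print(raidz_usable_tb(disks=4, disk_tb=0.8, parity=2))  # the 800GB "worst case"
```

So half of the raw capacity goes to parity. Two striped mirrors would give the same rough capacity, but would only be guaranteed to survive one failure, while RAIDZ2 survives any two.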

Had a backplane warning. Fixed by properly plugging in one of the cables on the controller.

This[3] might’ve helped with the ridiculous fan speed, but I am still using the commands mentioned earlier to make it reasonably quiet.

[0] https://www.youtube.com/watch?v=l55GfAwa8RI

[1] https://openzfs.github.io/openzfs-docs/Performance%20and%20Tuning/Hardware.html#hardware-raid-controllers

[2] https://fohdeesha.com/docs/H710-D1-full.html

[3] https://www.reddit.com/r/homelab/comments/dinl06/im_sure_this_has_been_posted_before_disable_3rd/

This one was the biggest challenge.

Started out wanting OPNSense virtualized within Proxmox. It’s absolutely one way to do it, but I really want to be able to shut down or patch Proxmox without my network going down.

Ended up buying the Protectli Vault (VP2420). It seems pretty good, and has 2.5Gb support.

I have decided to go with 10.0.0.0/8 for my network, both because it’s easy to type and because it offers a lot of IPs. For now, I will go with about 10 VLANs, separating the general “home” network from the lab/server, and also different systems within the lab.

Based on my understanding so far, it comes down to tagging. The tag is a number (the VLAN ID) carried in the Ethernet frame header, as defined by 802.1Q. While it can be directly associated with the IP design (an octet), that is not a requirement. A frame going to 10.1.2.3 can be tagged with 1337 if desired.
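To make the tag itself concrete, here is a small Python sketch that builds the 4-byte 802.1Q tag inserted into the Ethernet header. The layout (a 16-bit TPID of 0x8100, then 3 bits of priority, 1 DEI bit, and a 12-bit VLAN ID) is from the standard. The function name is my own.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def dot1q_tag(vlan_id, priority=0, dei=0):
    """Return the 4-byte tag: 16-bit TPID, then 3-bit PCP, 1-bit DEI, 12-bit VID."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("usable VLAN IDs are 1-4094")
    if not 0 <= priority <= 7 or dei not in (0, 1):
        raise ValueError("priority must be 0-7 and dei 0 or 1")
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


print(dot1q_tag(1337).hex())  # 81000539
```

Nothing in those four bytes refers to an IP address, which is why the VLAN ID and the subnet are free to differ.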

I want to help my brain out a bit, so the third octet in my IPs corresponds to the VLAN ID. 10.0.10.0 is the network address for VLAN 10. The second octet is reserved for different sites in the future.
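That convention is simple enough to express in a few lines of Python, which is handy when generating configs. This is a minimal sketch; the function names and the /24 per VLAN are assumptions based on the addresses used in this post. It also shows the one limit of the scheme: only VLAN IDs up to 255 fit in an octet.

```python
import ipaddress


def vlan_network(vlan_id, site=0):
    """Map a VLAN ID to 10.<site>.<vlan_id>.0/24."""
    if not 1 <= vlan_id <= 255:
        raise ValueError("only VLAN IDs 1-255 fit in one octet")
    if not 0 <= site <= 255:
        raise ValueError("site must fit in one octet")
    return ipaddress.ip_network(f"10.{site}.{vlan_id}.0/24")


def host_address(vlan_id, host, site=0):
    """Return the address of a host number inside the VLAN's /24."""
    if not 1 <= host <= 254:
        raise ValueError("host must be 1-254 in a /24")
    return vlan_network(vlan_id, site).network_address + host


print(vlan_network(10))     # 10.0.10.0/24
print(host_address(10, 1))  # 10.0.10.1
```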

This could be done with a script, but then seemingly with some workarounds.

First, remove the need to enter a password after executing the enable command. Second, supply the SSH password for each run/session without typing it.

(es) #configure

(es) (Config)#aaa authorization exec default local none

sshpass -p secret_password ssh -tt user@es < script_file

Snippets from the script, the official documentation has generally been the most helpful here.

vlan database

vlan 50

vlan name 50 lab

vlan routing 50

interface 0/1

description server-trunk

vlan tagging 2-4092

vlan participation include 10,20,50,60,70,80

vlan participation exclude 1,30,35,40

exit

The number of changes I expect to make might not justify these steps for now. I have a script that covers the entire setup, but I copy/pasted the commands in bulk. Works great.
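If the script ever needs to grow, generating it from a small table is one option. The sketch below is only an illustration in Python: it emits the same command lines as the snippets above, the function names are my own, and whether every VLAN should get `vlan routing` is an assumption. It has not been run against a switch.

```python
def vlan_commands(vlans):
    """Render the VLAN database lines for a {vlan_id: name} mapping."""
    lines = ["vlan database"]
    for vlan_id, name in sorted(vlans.items()):
        lines += [f"vlan {vlan_id}",
                  f"vlan name {vlan_id} {name}",
                  f"vlan routing {vlan_id}"]  # assumption: routing on every VLAN
    lines.append("exit")
    return lines


def trunk_commands(port, description, include, exclude):
    """Render the interface block for a tagged trunk port."""
    return [f"interface {port}",
            f"description {description}",
            "vlan tagging 2-4092",
            "vlan participation include " + ",".join(map(str, include)),
            "vlan participation exclude " + ",".join(map(str, exclude)),
            "exit"]


script = vlan_commands({50: "lab"}) + trunk_commands(
    "0/1", "server-trunk", [10, 20, 50, 60, 70, 80], [1, 30, 35, 40])
print("\n".join(script))
```

The output can be saved to `script_file` and fed to the `sshpass` command above, or pasted in bulk as before.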

One slight annoyance is that while the management IP is set from CLI on the mgmt VLAN interface, it’s still displayed as DHCP in the web interface. Actually setting it in the webui breaks everything for me, so I am accepting it being functional but presenting wrong.

interface vlan <mgmt>

ip address 10.0.<mgmt>.2 255.255.255.0

exit

ip default-gateway 10.0.<mgmt>.1

exit

network mgmt_vlan <mgmt>

This has made it available only from the mgmt IP, but it shows 192.168.1.2/24 in the web interface. Luckily I rarely need to use that. DHCP is not on any physical interface I can find. The webui says “There are no DHCP Server Pools configured”, so this remains a mystery. But it works fine otherwise, so I will try to not let this annoy me too much.

For simplicity I currently have one cable, which is the trunk. I will have 3 later: one each for iDRAC, mgmt, and trunk.

A solution for this[0], where a VM is using vmbr0 and then with the respective VLAN tag:

auto eno1

iface eno1 inet manual

auto vmbr0

iface vmbr0 inet manual

bridge-ports eno1

bridge-stp off

bridge-fd 0

bridge-vlan-aware yes

bridge-vids 2-4092

auto vmbr0.<mgmt>

iface vmbr0.<mgmt> inet static

address 10.0.<mgmt>.10/24

gateway 10.0.<mgmt>.1

With that, the foundation is ready.